About

NEO-QE is the acronym for the project “NEtwork-aware Optimization for Query Executions in Large Systems”, funded by the EU H2020 Marie Skłodowska-Curie Actions (MSCA) Individual Fellowships (IF). The individual research, carried out in liaison with the host organization University College Dublin (UCD), will last for two years (2018-2020).

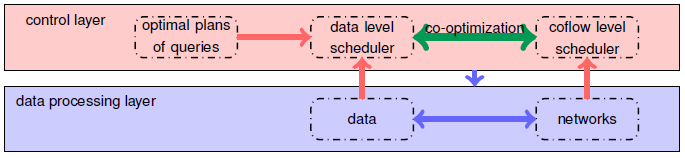

Network communication is one of the main performance challenges for big data computing in large distributed systems such as datacenters, in terms of both communication time and energy consumption. Significant improvements have been achieved using state-of-the-art methods designed in the research domains of data management, data communications and network management. However, almost all techniques developed within one of these fields treat the other fields as black boxes, and the additional performance gains available from a co-optimization perspective have not yet been well explored.

The fellow, Long Cheng, is working at UCD to tackle these challenges.

The Architecture and Tasks

The main tasks of NEO-QE are:

- Complex data queries (e.g., parallel queries, online queries).

- Service-level requirements (e.g., priority, latency, deadline).

- Complex network topologies (e.g., routing, congestion).

- Complex computing environments (e.g., Cloud).

- Energy consumption (minimized under constraints).

- Applications (e.g., bioinformatics workloads, large-scale machine learning).

Scientific Publications Supported by the Project

(All publications are openly accessible on my ResearchGate profile.)

- Xuan Chen, Long Cheng (CA), Cong Liu, Qingzhi Liu, Jinwei Liu, Ying Mao, John Murphy. A WOA-Based Optimization Approach for Task Scheduling in Cloud Computing Systems. IEEE Systems Journal, 2019 (in press).

- Long Cheng, Boudewijn van Dongen, Wil van der Aalst. Scalable Discovery of Hybrid Process Models in a Cloud Computing Environment. IEEE Transactions on Services Computing, 2019 (in press).

- Long Cheng, Spyros Kotoulas, Qingzhi Liu, Ying Wang. Load-balancing Distributed Outer Joins through Operator Decomposition. Journal of Parallel and Distributed Computing, 132: 21-35, 2019.

- Wenjia Zheng, Michael Tynes, Henry Gorelick, Ying Mao, Long Cheng, Yantian Hou. FlowCon: Elastic Flow Configuration for Containerized Deep Learning Applications. ICPP’19: Proc. 48th International Conference on Parallel Processing, pp. 87:1-87:10, Kyoto, Japan, Aug 2019.

- Dawen Xu, Kouzi Xing, Cheng Liu, Ying Wang, Yulin Dai, Long Cheng, Huawei Li, Lei Zhang. Resilient Neural Network Training for Accelerators with Computing Errors. ASAP’19: Proc. 30th IEEE International Conference on Application-specific Systems, Architectures and Processors, pp. 99-102, New York, USA, July 2019.

- Long Cheng, Cong Liu, Qingzhi Liu, Yucong Duan, John Murphy. Learning Process Models in IoT Edge. SERVICES’19: Proc. 2019 IEEE World Congress on Services, pp. 146-149, Milan, Italy, July 2019.

- Qingzhi Liu, Long Cheng, Tanir Ozcelebi, John Murphy, Johan Lukkien. Deep Reinforcement Learning for IoT Network Dynamic Clustering in Edge Computing. CCGrid’19: Proc. 19th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, pp. 600-603, Larnaca, Cyprus, May 2019.

- Long Cheng, John Murphy, Qingzhi Liu, Chunliang Hao, Georgios Theodoropoulos. Minimizing Network Traffic for Distributed Joins Using Lightweight Locality-Aware Scheduling. Euro-Par’18: Proc. 24th European Conference on Parallel Processing, pp. 293-305, Turin, Italy, Aug 2018.

Workshop Supported by the Project

The 2019 International Workshop on Network-Aware Big Data Computing (NEAC 2019), co-located with IEEE/ACM CCGrid.